Why We Need to Scale Learning: Unveiling the Wisdom Gap Series

The Imperative to Scale Learning

In a world characterized by rapid technological advances and sociopolitical complexities, we find ourselves at a pivotal juncture. The gap between the challenges we face (whether societal, organizational, or individual) and our collective ability to address them is widening at an alarming rate. This is the "Wisdom Gap," a term that captures a critical shortfall with far-reaching consequences.

If we fail to address this gap, we risk not only ineffective problem-solving but also the perpetuation of issues that could otherwise be mitigated or resolved. Put simply, we need to rapidly scale learning to build the skills required to narrow the Wisdom Gap and address the pressing issues facing individuals, teams, and society. Fortunately, learning theory and research can point us in the right direction, and learning technology can scale our efforts.

The Wisdom Gap Series: An Overview

Given the multifaceted nature of the Wisdom Gap and of the ways to address it, a single post can hardly do the topic justice. That's why we're introducing a weekly series aimed at unpacking its complexity. Through this series, we'll explore:

- The societal, organizational, and individual impact of the Wisdom Gap

- Strategies and methodologies, informed by learning theory and research, for narrowing the Gap

- The key role of metacognition and self-awareness in learning how to make wiser decisions to address or avoid problems

- The impact of emerging technologies, particularly Artificial Intelligence, on the Wisdom Gap and our capacity to narrow it

Defining the Wisdom Gap

The Wisdom Gap is not merely an abstract concept; it's a tangible, quantifiable disparity. It manifests in various domains—from public policy failures to corporate scandals, from ethical dilemmas in AI to unsustainable environmental practices. At its core, the Wisdom Gap represents the gulf between our current capabilities and the skills and understanding required to address increasingly complex challenges effectively.

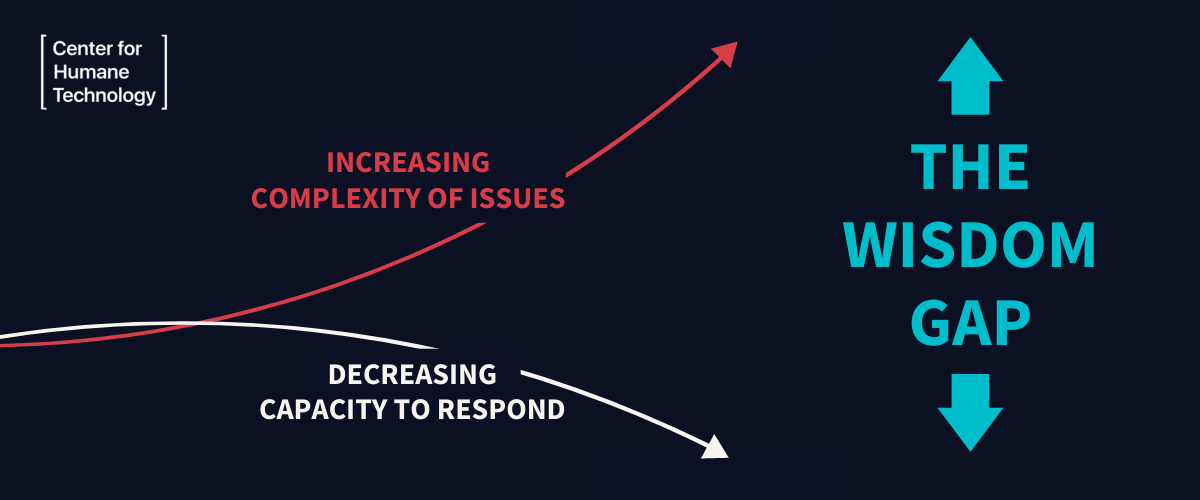

The Wisdom Gap can grow or shrink based on changes to the complexity of the issues we face and our capacity to respond. The image below from the excellent post from the Center for Human Technology shows the Wisdom Gap between the red line (complexity of issues) and white line (capacity to respond) increasing over time (left to right).

This widening is driven both by the increasing complexity of the issues we face and by our decreasing capacity to respond at a societal level. Disruptive technologies (e.g., Social Media and Artificial Intelligence) can, at least initially, deliver a one-two punch: they increase the complexity of the issues we face while decreasing our capacity to respond. However, there is hope that we can learn to control and leverage these same disruptive technologies to help narrow the Wisdom Gap.

Why the Wisdom Gap Matters

Ignoring or underestimating the Wisdom Gap can result in a cascade of adverse outcomes including:

- Ineffective Solutions: When we don't fully grasp the problems we're trying to solve, our solutions often miss the mark.

- Misallocated Resources: A lack of wisdom can lead to the poor distribution of time, capital, and human resources, compounding issues rather than resolving them.

- Ethical Oversights: When wisdom is lacking, ethical considerations may take a backseat, leading to decisions that prioritize short-term gains over long-term integrity.

- Organizational Decay: For companies, the Wisdom Gap can manifest as stunted innovation, low employee engagement, and ultimately, a decline in competitiveness and an increased risk of organizational demise.

In summary, unwise actions lead to bad outcomes. If we don't address the Wisdom Gap, we won't be able to handle the issues we face well, and we should expect a future that few of us are happy with. The future we want is far from guaranteed.

The stakes are high, and the immediate future can swing either way—toward massive improvements or significant setbacks for each of us, the organizations we're part of, and human society at large. While people can disagree on what would count as an improvement or setback, there are futures that almost everyone would want to avoid for themselves and the organizations or societies they belong to.

Consider the lifespan of a society or company. On average, societies last less than 400 years, and companies listed on the S&P 500 last less than 20 years. Societies and companies can decline or dissolve even when most of their members strive to prevent it. Check out Ray Dalio's book, Principles for Dealing with the Changing World Order (or the accompanying video), which dives into cycles in the world order and their implications for today.

In "Will Humanity Choose Its Future", Guive Assadi defines the concept of an evolutionary future, which he sees as reasonably likely:

"An evolutionary future is a future where, due to competition between actors, the world develops in a direction that almost no one would have chosen."

Avoiding a future that "almost no one would have chosen" seems like something almost everyone would choose. So how do we make it more likely that we will choose our future? We need to scale learning.

A Call to Action

Addressing the Wisdom Gap is not just an intellectual exercise; it's a societal, organizational, and individual imperative. For many of you in talent- or learning-focused roles, it is also a key mandate for you and your team. As we move forward in this series, we'll not only dissect the intricacies of the Gap but also provide actionable strategies informed by learning theory and research and supported by the latest learning technology.

What's Next?

Our next instalment will delve deeper into the organizational and societal implications of the Wisdom Gap. We'll explore real-world examples that illustrate its impact and discuss why closing this Gap is crucial for charting a future that we want.

Stay tuned! We welcome your feedback in the comments, on our LinkedIn page, on Twitter/X, or by contacting us directly. Your feedback is crucial to helping us make these posts as useful as possible and, ultimately, to driving the mission of ScaleLearning.
